
A Stimulating Option for Spinal Cord Injury Relief

Spinal cord injuries are among the most devastating injuries a person can live with, and among the most difficult to treat effectively. In addition to severely limiting sensory and motor function, damage to the spinal cord also affects the autonomic nervous system, which can reduce bladder control and cause dangerously large swings in blood pressure when a person changes posture.

Spinal cord epidural stimulation (scES), a relatively new technology that delivers electrical current to the spinal cord through implanted electrodes, holds great promise for alleviating these problems. The hardware used for scES is already used effectively to treat pain. However, key gaps in the technology limit its effectiveness, in particular the software that controls how the stimulation is delivered.

Researchers at the Johns Hopkins Applied Physics Laboratory (APL) in Laurel, Maryland, are applying machine-learning techniques to address these gaps. Working with academic partners at the University of Louisville and the Kessler Institute, and with the medical device industry through a collaboration with Medtronic Inc., they aim to help restore autonomy and dignity to people who live with spinal cord injuries.

Technology Gaps

Two key technological limitations must be overcome before scES can be viable as more than an exploratory treatment for the functional deficits caused by spinal cord injuries.

First, scES requires implanting electrodes close to the spinal cord at precise locations, which are difficult to determine with the computer visualization techniques currently available to clinicians and surgeons. Second, no existing system gives clinicians and users precise enough control over scES parameters to adapt the stimulation effectively to users' needs over time.

APL researchers are using machine-learning techniques to develop systems that address both of these problems.

Intuitive Visualization

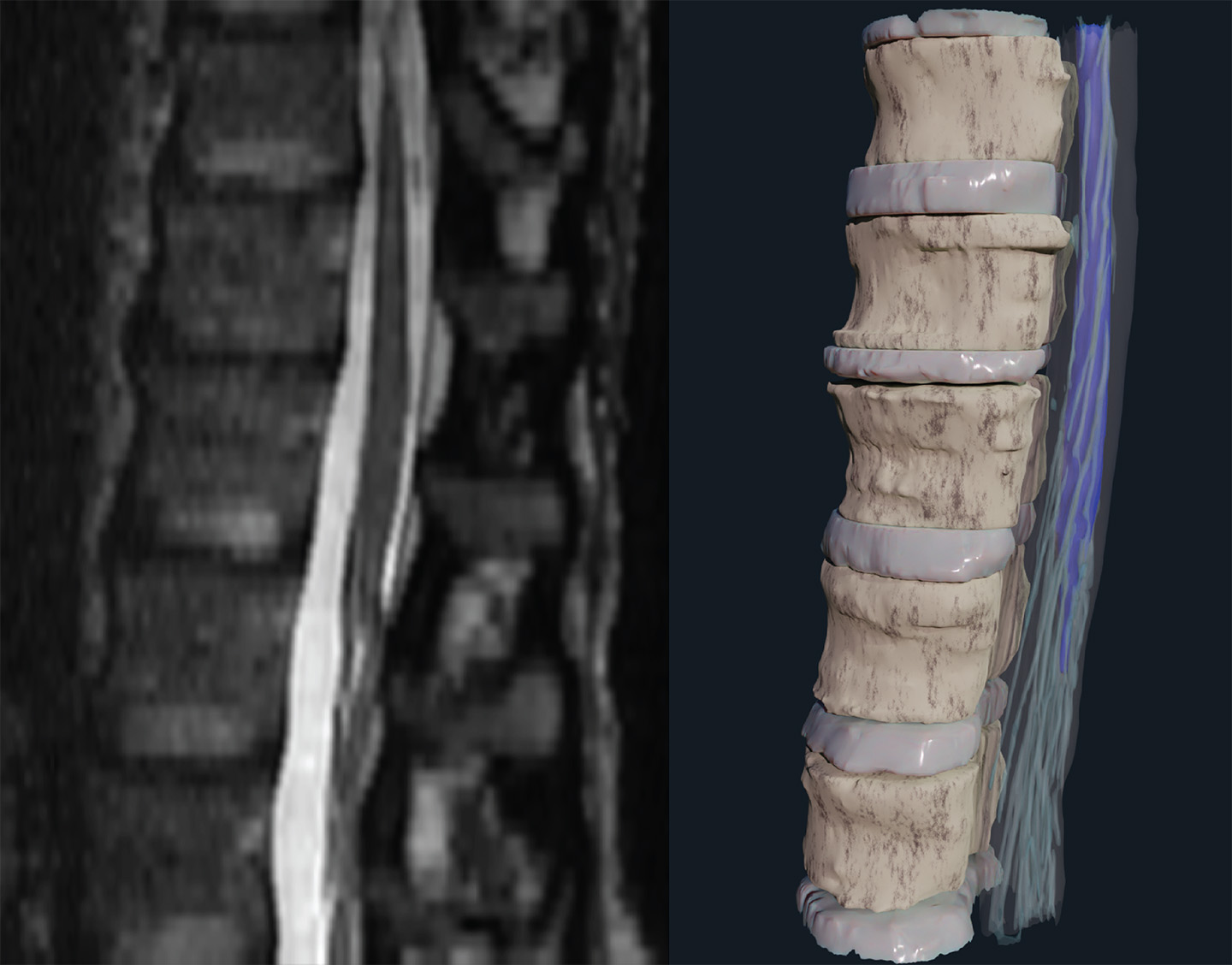

Accurately targeting a spinal cord implant remains a major challenge. The current state of the art for visualizing the inside of the body is to view the 3D anatomy as a series of 2D slices, which experts then annotate to build as complete an understanding of the relevant anatomy as possible. Although this is the industry standard for clinicians and surgeons, it doesn't provide the user-friendly, spatially intuitive view needed to guide the implantation.

The APL team, with lead engineer Jordan Matelsky, developed a pipeline for converting this 2D imagery into a more natural 3D visualization that’s easy to comprehend and quick to navigate.

“In a traditional research setting, bioimaging data is supposed to take center stage, but in a medical setting, the best thing it can do is be so intuitive as to be barely noticed,” Matelsky said. “The prospect of striking that balance is a major reason our surgeon colleagues have been so enthusiastic about this new technology.”

Dynamic Stimulation

Once an scES device is implanted, the level of stimulation delivered to the spinal cord must be carefully controlled, but it must also adapt dynamically to both short-term changes, such as shifts in the user's posture, and long-term changes, such as neural adaptations in the user's brain and body.

Bree Christie, a neuroprosthetics research scientist, is developing algorithms that can modulate the stimulation patients receive. Using training data from clinicians at the University of Louisville in Kentucky and working with medical device manufacturer Medtronic, they've made encouraging progress.

“We’ve been taking simple data sets and testing our algorithm against the decisions made by clinicians,” Christie explained. “There are 10 parameters you can modulate with scES, and we’ve focused on modulating a single parameter to start with. So far it’s working very well — our algorithm is matching what clinicians do, which is a good start.”

Francesco Tenore, the project manager overseeing the work, noted that achieving the buy-in and trust of clinicians is a crucial part of bringing scES to patients.

“We need to take a piecemeal approach, parameter by parameter, so the clinicians know what’s happening and know they have control over it and that they can modify it as they see fit,” Tenore said. “That allows two things to happen: First, the clinician can see when the algorithm is behaving incorrectly, so they can improve it; and second, they can see when the algorithm is behaving in a way that they wouldn’t, but that isn’t necessarily incorrect. This can give them insight into how to achieve the results they want while applying fewer changes, which is ultimately the goal.”

Christie published a paper about the dynamic adjustment algorithms in a special edition of Frontiers in Neuroscience called “Bioelectronic Medicines — New Frontiers in Autonomic Neuromodulation.”

Future Directions

Looking forward, Christie said they plan to gradually incorporate more stimulation parameters into the algorithm to increase the level of complexity it can handle, and to apply machine-learning techniques to make it more robust against physical disturbances that patients encounter in the real world.

“Right now, the algorithm works well if it’s simulating a person sitting upright in a wheelchair, but one of the biggest issues faced by people in this patient population is orthostatic hypotension, a condition where their blood pressure suddenly drops when standing up from a sitting or lying position,” explained Christie. “That can lead to brain damage and strokes, among other risks, so it’s a crucial gap to address.”

Neural interface research scientists Erik Johnson and Christa Cooke are developing an Android app that runs on a tablet and communicates wirelessly with the sensor hardware and the implanted scES system. Once the algorithm has been thoroughly validated, the team will test it with patients, and ultimately the patient's own reactions to the treatment will be incorporated into the algorithm as a parameter. The team presented this work in a paper at the 11th International IEEE Engineering in Medicine and Biology Society (EMBS) Conference on Neural Engineering in late April.

“We’re working on incorporating the patient into the loop of the algorithm, so they can express discomfort when the stimulation is too high, and we’ll see how the algorithm responds to that,” Johnson said. “That isn’t something that’s currently captured, but when this technology is eventually transitioned into the patient’s home, the only person who can tell clinicians what’s going on is the patient themselves, so it’s a critical thing to get right.”