Our Contribution

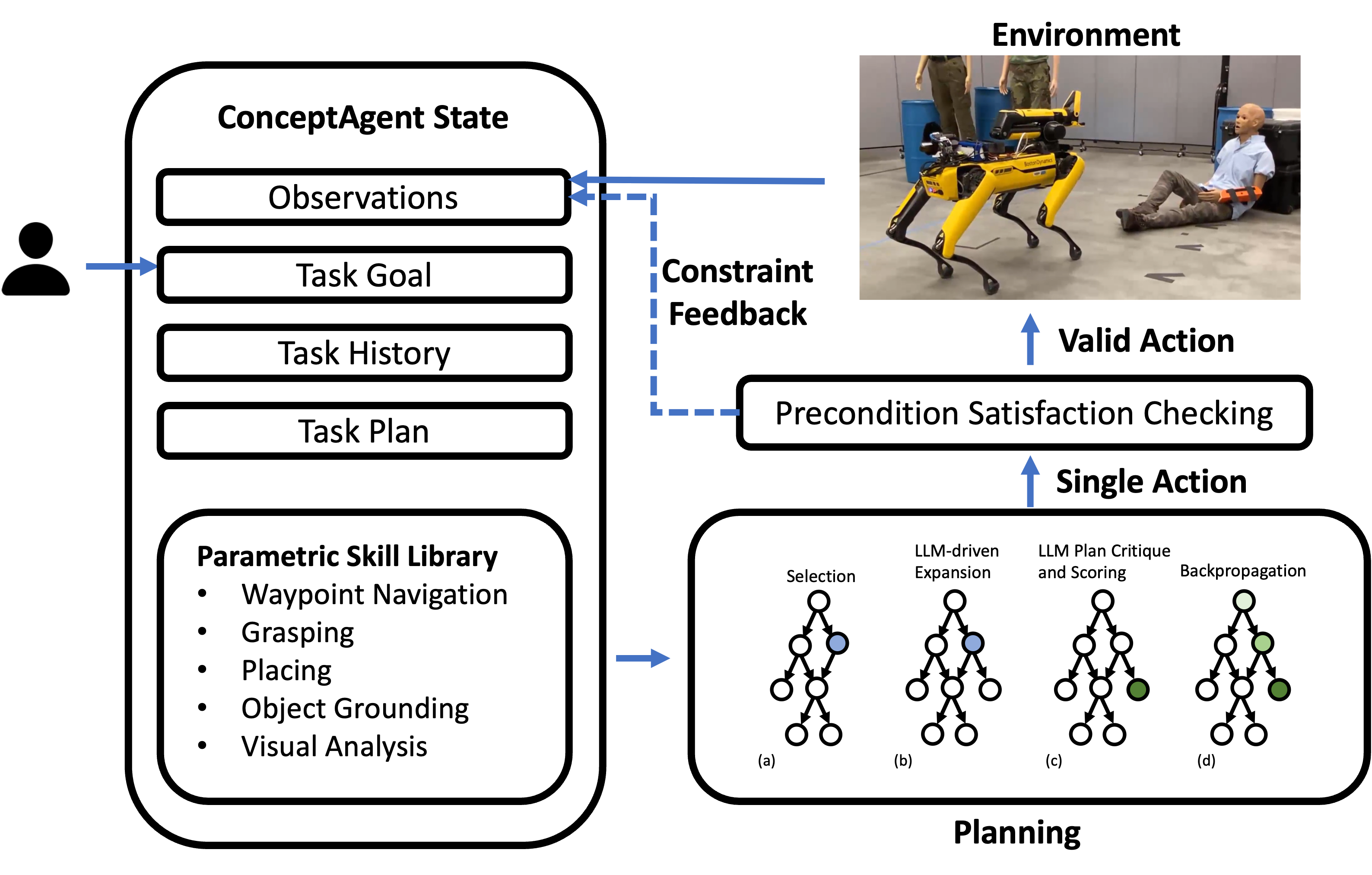

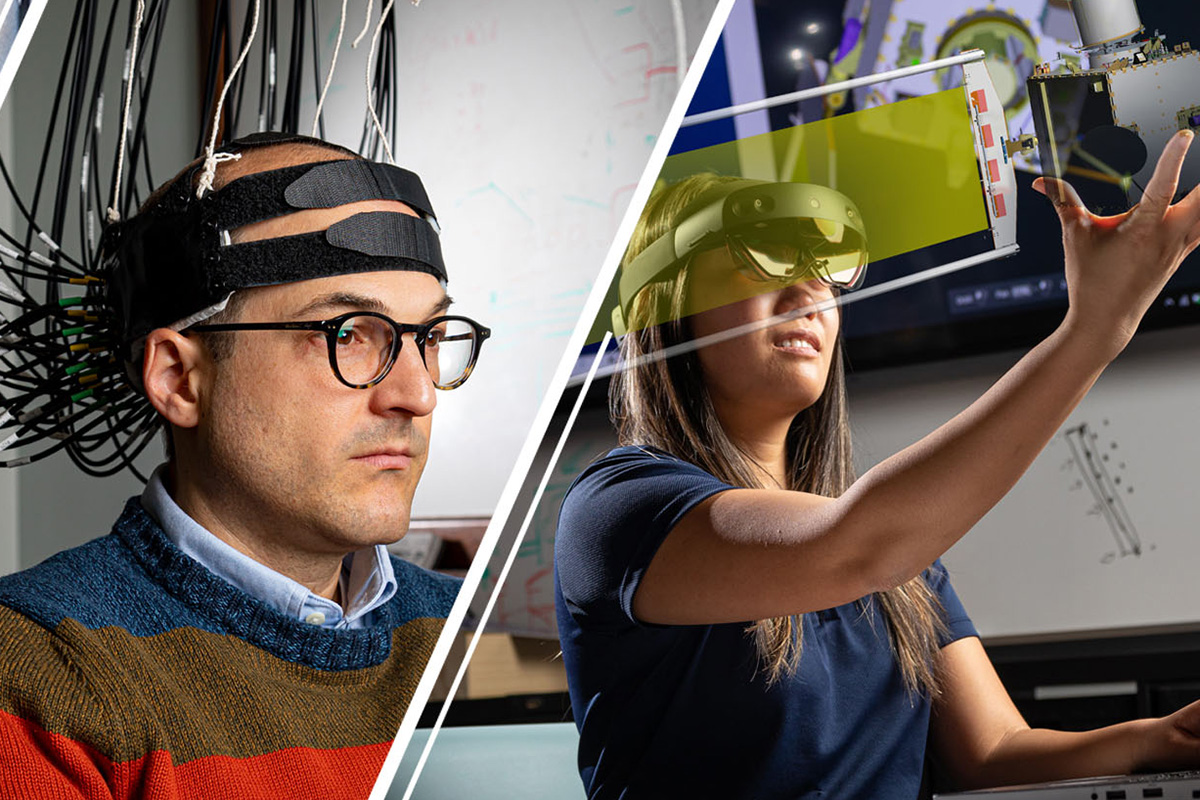

The advent of large, pretrained generative models has driven rapid advances in artificial intelligence (AI). Scientists and engineers in APL’s Intelligent Systems Center (ISC) are at the forefront of this work, from designing conversational AI systems to expanding what is possible in human–robot teaming. Our research focuses on domain-specific adaptation of generative models, composable AI systems, generative approaches to machine perception, red-teaming of frontier models, and digital twins of human behavior.